This article describes PubMatic’s successful journey to build a private cloud data center infrastructure, the backbone of our infrastructure operations and part of our competitive advantage.

Implementing a reliable and effective IT infrastructure is vital to long-term business success. Those embarking on this journey have several options to choose from, including the public cloud, dedicated data center, and colocation implementation.

The cloud computing option has gained traction with companies, including small-to-medium-sized adtech providers. Cloud computing is an on-demand compute service providing access to utilities such as hardware resources, applications, compute servers (physical servers and virtual servers), data storage, development tools, and networking capabilities, hosted at a remote data center managed by a public cloud services provider.

Major players in the public cloud space include Amazon, Google, and Microsoft. There is no initial capital investment to use them, as one pays only for the services used, and the ease of use and speed of implementation can be appealing as well. If users don’t manage these resources properly, however, growing hosting costs can have a negative financial impact: as a customer scales, the costs scale as well.

A decade ago, PubMatic started with a managed hosting model. We then migrated to the public cloud, using Amazon Web Services (AWS), and eventually to a private cloud infrastructure. Currently, we use our own colocation facilities to operate and scale our private cloud infrastructure.

A private cloud is a cloud service that is not shared with any other organization. The private cloud user has the cloud to themselves. By contrast, a public cloud is a cloud service that shares computing services among different customers, even though each customer’s data and applications running in the cloud remain hidden from other cloud customers.

Journey from Public Cloud to Colocation

The public cloud can be a good lower-cost option for startups that need immediate computing and reporting solutions. It can be helpful for exploring, testing, and validating a business idea or a new workload.

For PubMatic, the move to the private cloud was based on an assessment of multiple data points, such as the volume of data processed, ongoing compute needs, the cost of renting compute, and the associated network bandwidth costs. It was an easy decision to choose fixed costs with an environment that could scale with our business. We did not want to follow the public cloud model, in which one pays for what one uses and costs can become a large variable expense.

The public cloud presents some challenges regarding security, managing costs, compliance, network latency, and ownership. By comparison, the private cloud offers easier infrastructure management, especially in the areas of network latency, dynamic DNS load balancing, and security.

We have found the public cloud useful for validating business opportunities in new markets. Once an idea is proven in a given market and meets a certain cost threshold and other parameters, we then explore a colocation model. This is one way to contain and reduce operational costs across all locations.

Colocation to Private Cloud Model

A colocation model provides a fixed compute cost based on an initial investment to handle a particular traffic volume. The drawbacks are in terms of flexibility to accommodate new business needs and the time for procurement and set up, especially when needing to accommodate multiple locations. Normally, building additional capacity might take 45 to 90 days, based on market conditions such as availability of hardware, logistics, colocation space, and power at a given site.

In order to use an ideal combination of solutions available for the growth and innovation to support our global customers, PubMatic developed a hybrid cloud setup. Through this model we have been able to use the public cloud for burstable frontend capacity, which helps us with faster deployment. This also reduces the cost of the compute investment for peak seasonal and immediate business needs. This gives us the flexibility to build capacity in colocation centers if there is a sustained increase in capacity required.
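The burst-versus-build decision described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `plan_capacity`, the 30-day `sustained_days` default, and the sample demand figures are hypothetical and do not come from PubMatic's actual tooling.

```python
from typing import List, Tuple


def plan_capacity(
    daily_peak: List[float],
    colo_capacity: float,
    sustained_days: int = 30,  # assumed cutoff for "sustained" demand
) -> Tuple[bool, bool]:
    """Return (burst_to_public_cloud, build_colo_capacity).

    daily_peak: peak demand per day, oldest first, in the same unit
    as colo_capacity (for example, requests per second).
    """
    over = [demand > colo_capacity for demand in daily_peak]

    # Burst to the public cloud if the most recent day exceeded colo capacity.
    burst = bool(over) and over[-1]

    # Count the trailing run of consecutive days over capacity.
    streak = 0
    for flag in reversed(over):
        if not flag:
            break
        streak += 1

    # Only a sustained overage justifies new colocation capacity.
    build = streak >= sustained_days
    return burst, build


if __name__ == "__main__":
    # Hypothetical demand: two consecutive days above a capacity of 100.
    print(plan_capacity([90, 120, 130], colo_capacity=100, sustained_days=2))
```

Separating the two outcomes mirrors the text: a short spike is absorbed by the public cloud, while only a lasting increase triggers a colocation build.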

We also used the public cloud as a backup for business continuity, part of a strategy to maintain 99.9% uptime and reduce costs. Maintaining an autoscale skeleton infrastructure in the public cloud is an excellent, economical solution.

Introduction of the Private Cloud

We introduced our own private cloud to make use of compute capacity during non-peak seasons and to run additional workloads. We implemented the private cloud to enable cloud management features and to scale automatically on our internal hardware. This helps us get maximum use of our computing resources during peak and off-peak times, and enables PubMatic to use otherwise idle resources to execute non-critical workloads during off-peak times. The result is cost savings from a strategy that prioritizes demand-driven utilization of existing capacity over further hardware investment.

Colocation Data Center – Selection, Design, and Build

What is colocation?

A colocation center is a type of data center where equipment, space, and bandwidth are available for rental to retail customers. Colocation facilities provide space, power, cooling, and physical security for the server, storage, and networking equipment of other firms, and also connect them to a variety of telecommunications and network service providers with a minimum of cost and complexity.

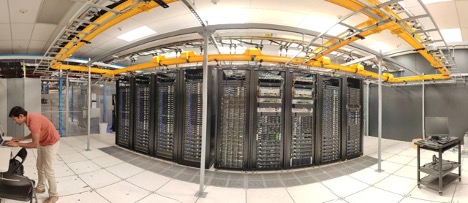

Our colocation facility in Virginia where we process backend data.

While location and customer proximity are the most important factors for any successful data center operation, many other factors should be considered, including internet service provider density, latency to customer endpoints, future growth options, data center provider certifications, and technical specifications. Keeping compute close to customers’ own compute setups helps to reduce data processing latency. In the programmatic technology industry, every millisecond counts, so any reduction in latency can help our customers act on data faster and win advertiser bids, improving efficiency and efficacy.

We are able to reduce costs through weighing the above parameters and implementing a dual vendor strategy in each location. PubMatic’s technology is designed so that there are no dependencies on any vendors or any one physical location. At any point in time, we can migrate traffic within a geographic location with minimal impact to processing ad impressions.

Connectivity to the internet and peering exchanges also play a vital role in deciding the location and data center provider. We peer with most of our primary partners to reduce the latency and operational cost of internet bandwidth. Most importantly, we measure latency with all of our customers before we finalize plans for a location or provider.
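One simple way to compare candidate locations by measured latency is to rank them on the median round-trip time to customer endpoints. The sketch below assumes the measurements have already been collected; the site names and sample values are invented for illustration, and the use of the median is an assumption rather than PubMatic's documented method.

```python
from statistics import median
from typing import Dict, List


def rank_sites(rtt_ms_by_site: Dict[str, List[float]]) -> List[str]:
    """Order candidate sites from lowest to highest median RTT (ms)."""
    return sorted(rtt_ms_by_site, key=lambda site: median(rtt_ms_by_site[site]))


if __name__ == "__main__":
    # Hypothetical round-trip times (ms) from each candidate site
    # to a set of customer endpoints.
    samples = {
        "site-a": [12.0, 15.0, 11.0],
        "site-b": [8.0, 9.0, 30.0],
    }
    print(rank_sites(samples))
```

The median is used here because a single slow measurement does not dominate the ranking; a high percentile would be a reasonable alternative when tail latency matters more.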

A high-availability facility on the West Coast.

Our colocation data center design is standardized across the globe, with a modular approach for large and small facilities. We have not observed a single failure with our design, given that a core tenet is to always ensure a highly available and redundant colocation data center across network and system components. The redundancy strategy begins with how applications are designed and rolled out on a cluster of servers, which mitigates any single point of server failure. Resiliency and redundancy are at the core of all data center components, including electrical power, PDUs, server racks, network switches, network routers, firewalls, load balancers, ISPs, and interconnectivity links between colocation sites. We have built redundancy not only at the component level but also at the colocation center level. This means that in a given region, one colo can act as a backup to the other.

We believe we have mastered building out colocation facilities, and our infrastructure team has designed templates with inbuilt redundancy and high availability. The system integration team builds the racks within the predefined templates and conducts imaging and pre-configuration of hardware and networks. We do server burn-in at our systems integration site (this is a process to test and validate the integrated components of the server before it is deployed to a production environment) in order to identify hardware issues early on.

Racks ready for shipment at a system integration facility.

Finally, the fully loaded racks are shipped to the destination colocation center for installation. Once powered on, the servers are remotely accessible for further automated application deployments. Within hours, we deploy the servers in production, and the team regularly monitors the entire infrastructure.

Colocation Capacity Management

Data center capacity management is important for our day-to-day operations. We conduct real-time capacity monitoring for each application, and a well-defined threshold alert helps us add more hardware to increase the capacity as needed to handle traffic growth.
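As a rough illustration of the threshold alert described above, the following Python sketch flags applications whose utilization crosses a limit. The `AppCapacity` fields, the sample numbers, and the 75% threshold are assumptions made for this example, not PubMatic's actual tooling or values.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AppCapacity:
    name: str
    current_qps: float  # observed load (hypothetical metric)
    max_qps: float      # load the provisioned hardware can handle


def utilization(app: AppCapacity) -> float:
    """Fraction of provisioned capacity currently in use."""
    return app.current_qps / app.max_qps


def apps_over_threshold(apps: List[AppCapacity], threshold: float = 0.75) -> List[str]:
    """Names of applications at or above the alert threshold."""
    return [app.name for app in apps if utilization(app) >= threshold]


if __name__ == "__main__":
    fleet = [
        AppCapacity("ad-server", current_qps=800, max_qps=1000),
        AppCapacity("reporting", current_qps=300, max_qps=1000),
    ]
    for name in apps_over_threshold(fleet):
        print(f"ALERT: {name} needs additional capacity")
```

Setting the threshold well below 100% leaves headroom for the hardware procurement lead time mentioned earlier.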

In addition, we work very closely with the business team to regularly plan for potential growth, allowing time to forecast additional capacity. Most importantly, in crucial times we are always prepared to divert traffic to the cloud so that there are no latency spikes or outages.

Managing and Maintaining the Infrastructure

At PubMatic we manage and maintain our infrastructure with an SLA of 99.9% uptime or higher across all components. All the components at each data center are closely monitored by the network operations center (NOC). We have developed an in-house data center infrastructure management tool (DCIM) for all areas with well-defined thresholds for infrastructure maintenance. There is no single point of failure in the design of our data centers. This strategy provides sufficient time to replace the faulty components in case of a failure — without any impact on production traffic.
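To make the 99.9% figure concrete, the short Python sketch below converts an uptime percentage into the downtime it permits over a period. The function name is hypothetical; the arithmetic is simply the unavailable fraction multiplied by the length of the period.

```python
def allowed_downtime_minutes(sla_percent: float, period_days: float) -> float:
    """Minutes of downtime permitted by an uptime SLA over a period."""
    unavailable_fraction = 1 - sla_percent / 100
    return unavailable_fraction * period_days * 24 * 60


if __name__ == "__main__":
    monthly = allowed_downtime_minutes(99.9, 30)
    yearly_hours = allowed_downtime_minutes(99.9, 365) / 60
    print(f"{monthly:.1f} minutes per 30-day month")
    print(f"{yearly_hours:.2f} hours per 365-day year")
```

At 99.9%, this works out to roughly 43.2 minutes per 30-day month, or about 8.76 hours per year, which is the window within which a faulty component must be bypassed or replaced.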

Dashboard used in the NOC to manage and monitor global infrastructure.

Automation – A Key to Successful Data Center Operation

Fully automated capacity utilization dashboard.

The growth in colocation data centers and the speed at which industries scale can lead to challenges from manual monitoring, manual troubleshooting, and slow remediation. PubMatic has applied automation in key areas to combat this, including data center build-out, scheduling, monitoring, maintenance, and application delivery. Data center automation increases agility and operational efficiency, and it reduces operational costs by reducing the number of people required to perform routine tasks and by enabling services to be delivered on demand in a repeatable, automated manner.

To the Future

It’s important to remember that to remain competitive, technology companies need to innovate, experiment, learn, and adopt the latest technologies in a timely manner. In order to achieve the set goals, the system needs to be reviewed and refined periodically to maintain efficient use of any computing resources.

Last but not least, a strong team is a key competitive advantage in how infrastructure is designed, built, set up, and monitored. Every complex infrastructure project requires thorough planning and coordinated action plans across technology and logistical partners at all stages. Therefore, engaging the right people at the right time, through the right channels, with concise communication is important to the success of a project.
