Introduction

With over 600 billion ad requests and 1.4 trillion advertiser bids a day, PubMatic has always focused on innovative strategies for handling colossal data volumes. As PubMatic’s array of use cases expanded and our strategic focus on capacity optimization intensified, it became increasingly evident that our existing infrastructure, anchored by Spark 2.3 and Scala 2.11, was nearing its operational limits.

The migration to Spark 3 marked a pivotal milestone for PubMatic’s data processing capabilities. Beyond a mere technological upgrade, it was a strategic endeavor to unlock the full potential of our infrastructure investment.

Data Processing Pipeline

Our existing system ran more than 100 Spark jobs distributed across 6 interconnected clusters. Each job was finely tuned and critical to our data processing pipeline. The challenge lay in transitioning these jobs to Spark 3 without causing disruptions or performance degradation.

Approach

As we worked through the migration, we concentrated on the following considerations:

- Thorough Analysis and Planning: Before diving into the upgrade process, we conducted a comprehensive analysis of our existing infrastructure and business requirements. This included assessing compatibility with new versions, identifying potential challenges, and outlining a clear roadmap.

- Testing and Validation: Testing was paramount to ensure the new platform met our performance and reliability standards. We used separate, dedicated test environments to rigorously check performance, reliability, and data integrity.

- Incremental Rollout: We minimized the risk of business impact by adopting an incremental rollout strategy. We started with less critical Spark jobs, monitored their performance, and verified their data integrity before migrating more critical jobs.

- Rapid Rollback Capability: Recognizing the importance of risk mitigation, we implemented a rapid rollback capability using separate branches in our version control system. Each branch represented a specific stage of the upgrade, allowing us to quickly revert to a stable state if unforeseen issues arose. This provided a safety net and kept disruption to business operations minimal.
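To illustrate the kind of data integrity check described above, here is a minimal Python sketch that compares the output of a job on the old platform with the output of the same job on Spark 3. It is an illustrative sketch rather than the tooling used in the migration: the names `row_fingerprint` and `compare_outputs` are hypothetical, and it assumes both outputs are small enough to iterate over as plain rows.

```python
import hashlib
from collections import Counter
from typing import Iterable, Sequence


def row_fingerprint(row: Sequence[object]) -> str:
    """Hash one row. repr() keeps None, "" and 0 distinct from each other."""
    return hashlib.sha256(repr(tuple(row)).encode("utf-8")).hexdigest()


def compare_outputs(baseline: Iterable[Sequence[object]],
                    candidate: Iterable[Sequence[object]]) -> dict:
    """Compare two job outputs as multisets of rows, ignoring row order."""
    base = Counter(row_fingerprint(r) for r in baseline)
    cand = Counter(row_fingerprint(r) for r in candidate)
    missing = base - cand      # rows present only in the baseline output
    unexpected = cand - base   # rows present only in the candidate output
    return {
        "baseline_rows": sum(base.values()),
        "candidate_rows": sum(cand.values()),
        "missing_rows": sum(missing.values()),
        "unexpected_rows": sum(unexpected.values()),
        "match": not missing and not unexpected,
    }
```

Treating the outputs as multisets means a change in row order does not count as a regression, while a dropped or duplicated row still does.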

Overcoming Challenges

We overcame several challenges to ensure a seamless migration, including:

- Dependent Jar Changes: Updates and changes to dependent jars required for our projects were managed through careful coordination and testing.

- Addressing Waiting State Issues: When we noticed that jobs were taking longer than usual, we investigated and discovered that they were entering a waiting state. Adjusting the YARN property ‘yarn.nodemanager.aux-services’ resolved this, ensuring jobs were no longer blocked by resource constraints.

- Handling Duplicate Fields: The transition to Spark 3 surfaced duplicate-field problems caused by changes in how Spark handles data, which in our case came down to a case-sensitivity issue. Given the unique nature of our data processing pipeline, we rewrote segments of our Spark codebase to address them. After examining Spark’s internals, we overrode Spark’s native AvroUtils class, which resolved the case-sensitivity issue and also improved data processing efficiency.

- Recognizing “_corrupt_record”: In Spark 3, queries on raw JSON or CSV files are disallowed when they reference only the internal corrupt record column. We mitigated this by caching the parsed data before querying it.

- Managing CSV File Sizes: Spark 3’s default handling of empty values when writing CSV increased output size. We mitigated this by explicitly setting the “emptyValue” option.
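The last two workarounds can be sketched in PySpark as follows. This is a minimal sketch under assumptions, not the code from the migration: the helper names `count_corrupt_records` and `write_compact_csv` are hypothetical, the input is assumed to be JSON, and the schema passed in is assumed to declare a `_corrupt_record STRING` column. The calls themselves (`DataFrameReader.schema`, `cache`, and the CSV `emptyValue` option) are standard Spark APIs.

```python
def count_corrupt_records(spark, path, schema):
    """Count malformed JSON rows.

    `schema` must include a `_corrupt_record STRING` column, for example
    the DDL string "id INT, _corrupt_record STRING".
    """
    # Caching the parsed result first is the workaround: the filter then runs
    # against the cached DataFrame rather than directly against the raw files.
    parsed = spark.read.schema(schema).json(path).cache()
    return parsed.filter(parsed["_corrupt_record"].isNotNull()).count()


def write_compact_csv(df, path):
    """Write CSV with empty strings emitted as nothing instead of quoted ""."""
    # Without this option, Spark 2.4+ writes each empty string as "" (two
    # extra bytes per empty cell), which adds up on wide, sparse outputs.
    df.write.option("emptyValue", "").csv(path)
```

Both helpers take an existing `SparkSession` or `DataFrame`, so they can be dropped into a job without changing how the session is created.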

Key Highlights

Our migration to Spark 3 yielded significant achievements:

- Capacity Gains & Capex Savings: We saw an impressive increase of approximately 8% in daily transactions, translating to Capex savings of around 10%.

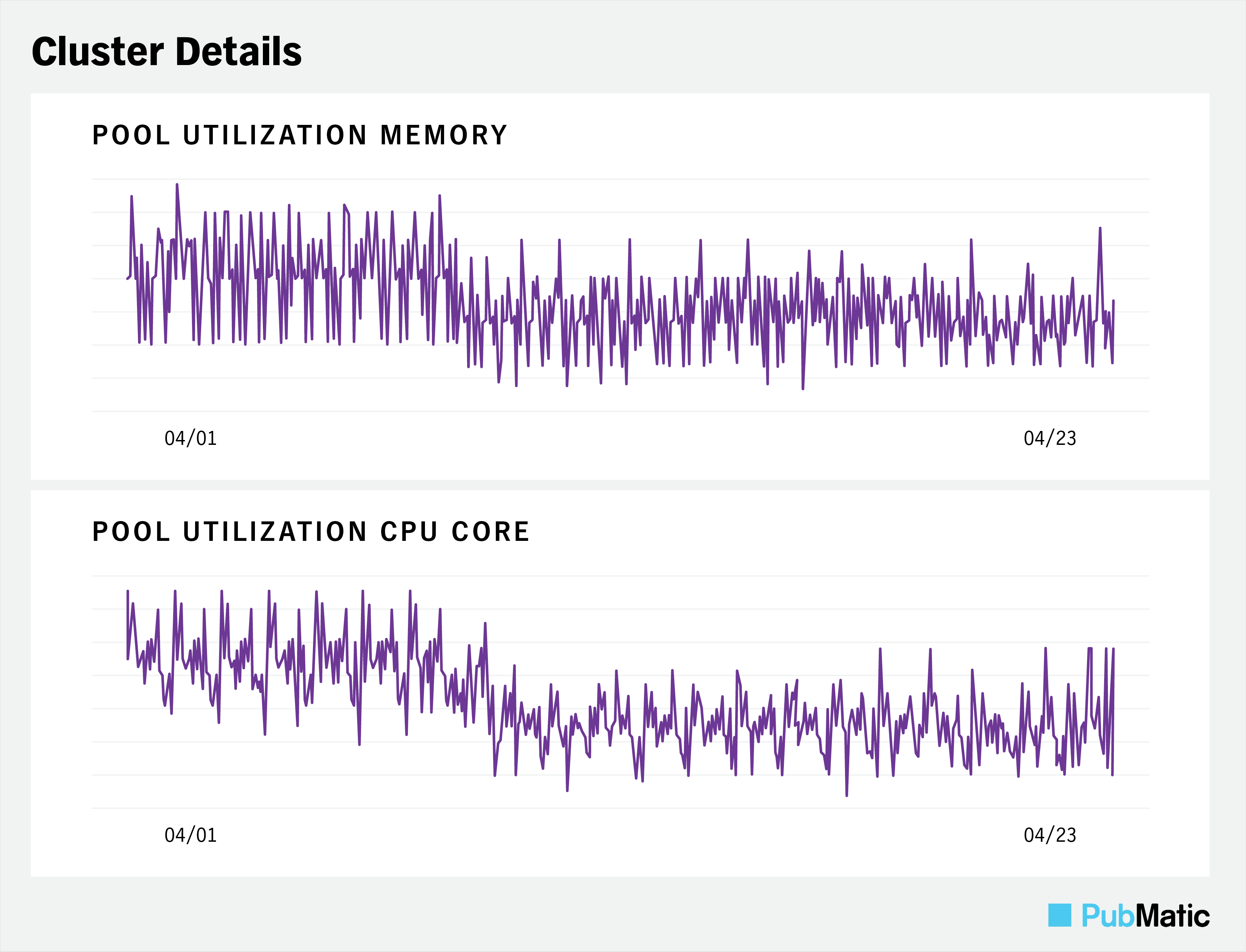

- Optimal Cluster Utilization: Resource utilization across all our Aggregation clusters dropped considerably, which allowed us to onboard new Spark jobs for some planned use cases without adding extra hardware. The resource graph shows the substantial gain that we achieved.

- Advanced Spark Features: Leveraging Spark 3’s advanced capabilities, including Adaptive Query Execution, DataFrame Join Optimizations, and Dynamic Partition Pruning, significantly enhanced job performance and efficiency.

- Inherent Support for ‘spark-avro’: We benefited from Spark’s built-in spark-avro module, eliminating the need to rely on external libraries such as the Databricks Avro package.
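For readers who want to try these features, the sketch below shows how they are typically switched on in PySpark. The configuration keys are standard Spark SQL settings, but which ones were actually tuned in this migration is not stated above, so treat the selection as an assumption. The names `SPARK3_SQL_CONF`, `apply_conf`, and `read_avro` are hypothetical.

```python
# Standard Spark 3 SQL settings for the features named above. Depending on
# the Spark 3.x release, some of these are already enabled by default.
SPARK3_SQL_CONF = {
    "spark.sql.adaptive.enabled": "true",
    "spark.sql.adaptive.coalescePartitions.enabled": "true",
    "spark.sql.adaptive.skewJoin.enabled": "true",
    "spark.sql.optimizer.dynamicPartitionPruning.enabled": "true",
}


def apply_conf(builder, conf=SPARK3_SQL_CONF):
    """Apply each setting to a SparkSession builder and return the builder."""
    for key, value in conf.items():
        builder = builder.config(key, value)
    return builder


def read_avro(spark, path):
    """Read Avro with the built-in data source (short format name "avro")."""
    return spark.read.format("avro").load(path)
```

A typical call would be `apply_conf(SparkSession.builder.appName("job")).getOrCreate()`. Note that the spark-avro module ships with Spark but still has to be on the classpath when the job is submitted, for example through the `--packages` option of `spark-submit`.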

Conclusion

At PubMatic, we take pride in our technical prowess and commitment to excellence. By embracing innovation and overcoming challenges with resilience, PubMatic continues to drive data processing efficiency to new heights, from hardware acceleration to data management.

Stay tuned for more insights and best practices as we continue to innovate in the world of Big Data and analytics.